Introduction to DeepSeek R1 Architecture

Deep learning models such as DeepSeek R1 have gained wide recognition in the field of artificial intelligence, and they are changing how machines learn and interact with information. At the forefront of this revolution are Large Language Models (LLMs), which have proven able to process and generate human-like language based on the data they are trained on.

DeepSeek R1 is one of the most advanced developments in this field. It was designed to push the boundaries of what LLMs can accomplish, particularly on difficult reasoning tasks.

What are Large Language Models?

Large Language Models are advanced algorithms that learn from huge amounts of text to understand, interpret, and generate human language. They work by learning patterns in massive datasets and using those patterns to produce relevant responses. Two basic techniques make this possible: tokenization, which breaks language down into manageable pieces (tokens), and embedding, which transforms those tokens into numerical vectors that the model can compute with. The goal of an LLM is not only to generate text but also to capture the subtleties of language. This allows LLMs to handle tasks ranging from simple text generation to complex, multi-turn interactions with users.
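To make tokenization and embedding concrete, here is a minimal toy sketch. The vocabulary, the whitespace splitting, and the random embedding values are all illustrative assumptions; production LLMs use learned subword tokenizers and trained embedding tables with far larger dimensions.

```python
import random

# Hypothetical toy vocabulary. Real LLMs use learned subword tokenizers
# with vocabularies of many thousands of entries.
VOCAB = {"<unk>": 0, "the": 1, "car": 2, "is": 3, "moving": 4, "fast": 5}
EMBED_DIM = 4

def tokenize(text: str) -> list[int]:
    """Split on whitespace and map each word to an integer id (unknown words -> <unk>)."""
    return [VOCAB.get(word, VOCAB["<unk>"]) for word in text.lower().split()]

# Embedding table: one vector per vocabulary entry. In a trained model these
# values are learned; here they are random placeholders.
rng = random.Random(0)
EMBEDDINGS = [[rng.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in VOCAB]

def embed(token_ids: list[int]) -> list[list[float]]:
    """Look up the vector for each token id."""
    return [EMBEDDINGS[i] for i in token_ids]

ids = tokenize("The car is moving fast")
vectors = embed(ids)
print(ids)                            # [1, 2, 3, 4, 5]
print(len(vectors), len(vectors[0]))  # 5 4
```

The model never sees the words themselves, only these vectors, which is why the embedding step is what makes "mathematical calculations" on language possible.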

The Emergence of Reasoning Models

The development of LLMs has reached a point where understanding text is no longer enough. The next step is integrating reasoning capabilities that let models interpret information in context. Reasoning models are designed to work through multiple layers of information and draw conclusions, a capability loosely comparable to human cognitive processes. The shift from basic text generation to deeper reasoning opens many possibilities for fields that require analytical ability, such as medical diagnostics, scientific research, and complex coding tasks. Such models could expand AI’s problem-solving capabilities and become valuable tools for both professional and everyday use.

Here’s an example illustrating the reasoning abilities of a modern LLM:

Question:

A car is moving at 50 mph and travels for 4 hours. How far does it go?

Plain response:

The car travels 200 miles.

Response with intermediate reasoning steps:

To determine the distance traveled, we use the formula:

Distance = Speed × Time

Given that the speed is 50 mph and the time is 4 hours:

Distance = 50 mph × 4 hours = 200 miles

So, the car travels 200 miles.

This structured response shows the model breaking the problem down logically, which makes the answer easier to follow and to verify.

Introducing DeepSeek R1

DeepSeek R1 stands apart from other models because of an architecture that includes components specifically designed to enhance reasoning. Built on DeepSeek-V3, a powerful large language model, DeepSeek R1 adds capabilities that help it handle complicated, multi-step tasks. Its most notable attributes are its ability to work through ambiguous or uncertain problems and its training techniques, which strengthen reasoning compared with earlier models. Looking more closely at DeepSeek R1’s structure shows how it raises the standard for LLMs and opens new avenues for real-world applications.

Core Components of DeepSeek R1

Understanding DeepSeek R1’s architecture means dissecting its key components, each of which is crucial to its ability to function as a sophisticated reasoning model. Examining these components clarifies the technology that powers this architecture.

Mixture-of-Experts (MoE) Design

One of the main characteristics that distinguish DeepSeek R1 is its Mixture-of-Experts (MoE) design. The architecture combines many neural sub-networks, called “experts,” with a routing mechanism that sends each input token to the most relevant experts. Because only a small subset of experts is active at any given moment (of the model’s 671 billion total parameters, about 37 billion are activated per token), DeepSeek R1 reduces its computational cost and improves efficiency without shrinking its overall capacity.

The MoE structure lets the model divide work among different networks, so that different kinds of input are handled by experts suited to them. For instance, when a user asks a question involving mathematical logic, the router may send those tokens to experts that became specialized in that kind of content during training. This specialization emerges from training rather than being assigned by hand, and routing happens token by token rather than once per query. Selective activation of this kind can lead to faster responses and, potentially, greater precision on specialized tasks.
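The routing idea can be sketched as generic top-k gating: a router scores every expert for a token, only the k best experts are run, and their outputs are mixed using normalized gate weights. The function names, the toy experts, and the softmax router are assumptions for illustration; DeepSeek’s actual MoE layer differs in details such as shared experts and its load-balancing strategy.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_layer(x, router_weights, experts, k=2):
    """Route one token vector x to its top-k experts and mix their outputs.

    x: the token's hidden vector (list of floats).
    router_weights: one weight vector per expert; score = dot(w, x).
    experts: callables mapping a vector to a vector (stand-ins for feed-forward nets).
    """
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    probs = softmax(scores)
    # Keep only the k highest-probability experts; the others are never run.
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    out = [0.0] * len(x)
    for i in top:
        y = experts[i](x)
        gate = probs[i] / norm  # renormalize gates over the selected experts
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out, top

# Four hypothetical "experts": simple scalings standing in for real networks.
experts = [lambda v, s=s: [s * a for a in v] for s in (1.0, 2.0, 3.0, 4.0)]
router = [[1, 0], [0, 1], [-1, 0], [0, -1]]
output, chosen = moe_layer([2.0, 1.0], router, experts, k=2)
print(chosen)  # [0, 1]
```

Only experts 0 and 1 are evaluated for this token; experts 2 and 3 cost nothing, which is where the efficiency of sparse activation comes from.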

Multi-Token Prediction (MTP)

Another integral part of the DeepSeek R1 architecture is Multi-Token Prediction (MTP). Traditional LLMs are trained to predict only the next token, and they generate text one token at a time, which limits generation speed. MTP trains DeepSeek R1 to predict several future tokens at each position rather than just one. These additional predictions can also be used, for example through speculative decoding, to speed up text generation, since the model can propose more than one token per step.

MTP may also improve the quality of responses. Predicting several tokens ahead gives the model a denser training signal and encourages it to plan beyond the immediate next word, which can help it produce sophisticated outputs that depend on intricate relationships within the text. This is one of the ways DeepSeek R1’s base architecture improves on earlier next-token-only training.
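As an illustration, the sketch below assumes MTP is used as an auxiliary training objective, as described for DeepSeek-V3 (R1’s base model): each position gets targets for several future tokens, and the extra losses are averaged and added to the ordinary next-token loss with a weight. The helper names and the weight `lam` are hypothetical, and the model itself is left out; only target construction and loss combination are shown.

```python
import math

def mtp_targets(tokens, depth):
    """For each position i, return the next `depth` tokens (i+1 .. i+depth).

    Positions too close to the end to have all `depth` future tokens are skipped.
    """
    return [tokens[i + 1 : i + 1 + depth] for i in range(len(tokens) - depth)]

def cross_entropy(prob_of_target):
    """Loss for one prediction, given the probability assigned to the true token."""
    return -math.log(prob_of_target)

def mtp_loss(main_probs, extra_probs, lam=0.3):
    """Combine the usual next-token loss with extra-depth losses.

    main_probs: probabilities the model assigned to the true next token.
    extra_probs[k]: probabilities assigned to the true token k + 2 steps ahead.
    lam: hypothetical weight for the auxiliary MTP term.
    """
    main = sum(cross_entropy(p) for p in main_probs) / len(main_probs)
    depth = len(extra_probs)
    # Average the per-depth mean losses, then scale by lam.
    aux = sum(
        sum(cross_entropy(p) for p in probs) / len(probs) for probs in extra_probs
    ) / depth
    return main + lam * aux

print(mtp_targets([10, 11, 12, 13, 14], depth=2))
# [[11, 12], [12, 13], [13, 14]]
```

Each position now supervises two future tokens instead of one, which is the "denser training signal" described above.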

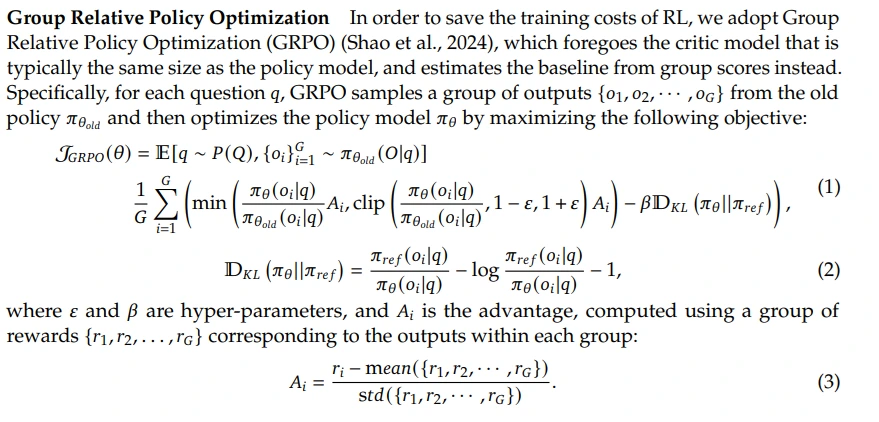

GRPO Reinforcement Learning Algorithm

To further refine DeepSeek R1, its training uses the Group Relative Policy Optimization (GRPO) reinforcement learning algorithm. Traditional methods such as PPO train a separate value (critic) model alongside the policy to estimate how good each response is. GRPO drops the critic: for each prompt it samples a group of responses, scores them, and judges each response relative to the group’s average, which reduces the memory and compute needed for training.

This approach lets DeepSeek R1 improve its reasoning by comparing many of its own attempts at the same problem and reinforcing the ones that score better than the group average. By shifting toward the strategies that work best, the model improves on the complex tasks it faces and becomes better suited to applications that demand high levels of analysis and critical thinking.
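The “group relative” part of GRPO can be shown in a few lines. Assuming each sampled answer to the same prompt has already received a scalar reward, an answer’s advantage is its reward minus the group mean, divided by the group’s standard deviation. The function name and example rewards are hypothetical; using the population standard deviation and adding a small `eps` are choices made here to avoid division by zero.

```python
import statistics

def group_relative_advantages(rewards, eps=1e-8):
    """GRPO-style advantages: score each sampled answer against its own group.

    rewards: scalar rewards for the answers sampled for one prompt.
    Returns (r - mean) / std, so above-average answers get a positive advantage.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]

# Hypothetical rewards for 4 sampled answers: 1.0 if correct, else 0.0.
rewards = [1.0, 0.0, 0.0, 1.0]
print(group_relative_advantages(rewards))  # approximately [1.0, -1.0, -1.0, 1.0]
```

No critic network appears anywhere: the group itself supplies the baseline. If every answer in a group gets the same reward, all advantages are zero and that prompt contributes no learning signal.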

Training Methodology and Practical Applications

DeepSeek R1’s effectiveness can be attributed to its distinct training methodology, which combines modern techniques designed to develop advanced reasoning abilities. Understanding these training strategies helps explain how the model can be used across a variety of areas.

Training Approach: A Blend of SFT and RL

DeepSeek R1 uses a training method that combines Supervised Fine-Tuning (SFT) with Reinforcement Learning (RL); in practice, its pipeline alternates between SFT and RL stages. In the SFT stage, the model is trained on a high-quality dataset of example responses, teaching it to understand and produce text that follows established patterns. This stage gives the model a solid foundation.
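A common way to implement the SFT objective is the average negative log-likelihood of the reference answer, with prompt tokens masked out so the model is trained only on the response. The sketch below assumes that convention (it is not confirmed as DeepSeek R1’s exact setup) and takes hypothetical per-token probabilities as input instead of running a real model.

```python
import math

def sft_loss(token_probs, is_response):
    """Average negative log-likelihood over response tokens only.

    token_probs[t]: probability the model assigned to the reference token at step t.
    is_response[t]: False for prompt tokens (not trained on), True for answer tokens.
    """
    losses = [-math.log(p) for p, keep in zip(token_probs, is_response) if keep]
    return sum(losses) / len(losses)

# Hypothetical example: 2 prompt tokens followed by 3 answer tokens.
probs = [0.9, 0.8, 0.5, 0.25, 1.0]
mask = [False, False, True, True, True]
print(round(sft_loss(probs, mask), 4))  # 0.6931
```

Here the loss equals ln 2, since the three answer tokens contribute ln 2, ln 4, and 0, and the two prompt tokens are ignored.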

After the SFT stage, RL techniques are introduced that let the model improve its performance through feedback on its own outputs. By sampling groups of candidate answers and scoring them with GRPO during training, DeepSeek R1 learns more effective ways to solve complex problems. This blended approach not only improves the model’s ability to reason but also helps it handle real-world context.
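The RL update itself can be sketched with the clipped, PPO-style per-token objective used in GRPO, plus a penalty that keeps the policy close to a frozen reference model. This minimal sketch takes log-probabilities and group-relative advantages as plain inputs; the `clip_eps` and `beta` values are hypothetical, and a real implementation would compute these terms as tensors and maximize the objective with gradient ascent.

```python
import math

def grpo_token_objective(logp_new, logp_old, logp_ref, advantage,
                         clip_eps=0.2, beta=0.04):
    """Per-token GRPO objective (to be maximized) in the clipped PPO-style form.

    logp_new / logp_old / logp_ref: log-probability of the sampled token under the
    current policy, the policy that generated the sample, and a frozen reference model.
    """
    ratio = math.exp(logp_new - logp_old)
    clipped = max(min(ratio, 1 + clip_eps), 1 - clip_eps)
    # Take the more pessimistic of the unclipped and clipped terms.
    surrogate = min(ratio * advantage, clipped * advantage)
    # KL estimator: pi_ref/pi - log(pi_ref/pi) - 1, which is always >= 0.
    log_r = logp_ref - logp_new
    kl = math.exp(log_r) - log_r - 1
    return surrogate - beta * kl

def grpo_objective(samples, advantages, **kw):
    """Average the per-token objective over each answer, then over the group.

    samples: one list per answer of (logp_new, logp_old, logp_ref) tuples.
    advantages: one group-relative advantage per answer.
    """
    per_answer = [
        sum(grpo_token_objective(n, o, r, adv, **kw) for n, o, r in tokens) / len(tokens)
        for tokens, adv in zip(samples, advantages)
    ]
    return sum(per_answer) / len(per_answer)

# Hypothetical group of two answers.
samples = [[(-0.1, -0.2, -0.15), (-0.3, -0.3, -0.3)], [(-1.0, -0.9, -1.1)]]
print(grpo_objective(samples, advantages=[1.0, -1.0]))
```

When the current, old, and reference policies agree, the ratio is 1 and the KL term is 0, so each token’s objective reduces to its answer’s advantage. The clipping stops a single update from moving the policy too far on any one token.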

Applications of DeepSeek R1 in Real-World Scenarios

DeepSeek R1’s potential applications are broad and span many sectors. In programming, for instance, DeepSeek R1 can assist developers with coding advice, debugging help, and optimization tips, while following the logic of the task at hand.

In education, it is well suited to solving complex math problems and helping students grasp difficult concepts by breaking them down into simple steps. Logic and language puzzles are also a good fit for DeepSeek R1, letting it demonstrate advanced reasoning while keeping users engaged through interaction.

Ultimately, DeepSeek R1’s flexibility shows in its ability to tackle a variety of tasks that demand analytical skill, which makes it a valuable tool in fields ranging from technology development to education.

Future Directions and Enhancements

As the field of AI grows, so do the opportunities to enhance the DeepSeek R1 architecture. Ongoing research will likely focus on extending the MoE design to include even more experts, providing better comprehension of context. The training methods could also be adapted to incorporate newer approaches, such as unsupervised learning, which could add further layers of data hierarchy and context recognition.

Improving user interaction and real-time adaptability is also vital. Future versions of DeepSeek R1 could aim for seamless integration with other systems, enabling collaborative AI designs in which different models share their expertise to answer a wide range of questions.

As DeepSeek R1 and its successors advance, they will affect many areas and strengthen the ability of AI systems to reason, adapt, and innovate in response to human needs.

Conclusion

DeepSeek R1 represents a significant advance in artificial intelligence, specifically in the field of reasoning models. By combining its Mixture-of-Experts (MoE) design, Multi-Token Prediction (MTP), and the GRPO reinforcement learning algorithm, DeepSeek R1 sets a new standard for what large language models can accomplish.

With its innovative training methodology and wide range of possible applications, DeepSeek R1 is not simply another step in LLM technology but a transformative factor in how AI assists users with difficult reasoning tasks. As we explore the possibilities of AI, it is crucial to encourage responsible development, making sure these powerful tools are created responsibly and integrated into society to benefit everyone.
