🚀🚀 The Future of Recruitment is Here!

Traditional software architectures rely on predefined rules, conditional logic, and static workflows to process data and automate tasks. However, these systems often lack flexibility, adaptability, and contextual intelligence, especially in dynamic fields like recruitment and HR, as explained in my previous article: The Agentic AI Revolution: Redefining Application Development.

🔹 The Rise of AI Agents in Automation

Instead of writing complex business logic manually, AI agents can dynamically process data, learn from interactions, and make decisions autonomously. AI-powered agents replace the traditional logic layer with an adaptive and intelligent system capable of:

✅ Extracting structured data from unstructured sources (like CVs).

✅ Understanding natural language queries in a chatbot interface.

✅ Improving over time by leveraging memory and machine learning models.

🔹 How AI Agents Are Transforming HR & Recruitment

With AI-driven automation, recruiters can process large batches of CVs quickly, extract relevant details automatically, and ask natural-language questions about candidates, much as they would ask a human assistant.

In this tutorial, we will build an AI-powered recruitment system that:

✅ Uploads PDF CVs via a web interface.

✅ Extracts candidate details (name, email, skills) using CrewAI Agents.

✅ Stores data in a PostgreSQL database for structured analysis.

✅ Allows recruiters to query the database via an intelligent chatbot.

This three-layer application will be built using:

✅ Frontend: HTML, JavaScript (AJAX, Bootstrap).

✅ Backend: Python (Flask, CrewAI).

✅ Database: PostgreSQL (psycopg2).

🚀 Let us dive in and start building the future of recruitment automation!

Note: This project is a proof of concept (POC) designed to showcase AI-driven recruitment automation; further enhancements can improve scalability and accuracy. 🚀

Step 1: Understanding the Three-Layer Architecture

A well-structured application often follows a three-layer architecture, which improves scalability, maintainability, and flexibility by separating the user interface, business logic, and data storage into distinct layers. This modular approach allows each layer to evolve independently, making the system easier to manage, scale, and extend.

In our AI-powered CV processing system, each layer has a clear responsibility: the frontend handles user interaction, the backend automates data extraction with AI agents, and the database stores the results.

📌 1.1 Layers of Our AI-Powered System

1️⃣ Presentation Layer (Frontend)

📌 Tools Used: HTML5, JavaScript (AJAX requests with Axios), Bootstrap

📌 Primary Role:

The front end is the user-facing interface that enables recruiters to interact with the system. This includes:

✅ Uploading CVs via a simple file upload form.

✅ Displaying a chatbot interface where users can ask questions about candidates.

✅ Sending requests to the backend via AJAX and processing responses dynamically.

📌 Why is it important?

- User Experience (UX): A clean, intuitive UI makes the system easy to use.

- Asynchronous Processing: AJAX ensures smooth interactions without page reloads.

- Scalability: The frontend can be expanded or replaced (e.g., upgrading to React, Vue.js, or a mobile app) without affecting the backend.

📌 Example Interaction:

A recruiter visits the web app, uploads a CV, and later asks:

🗣️ “Who are the top Python developers in my database?”

The chatbot retrieves relevant candidate profiles and displays the results.

2️⃣ Logic Layer (Backend)

📌 Tools Used: Python (Flask, CrewAI)

📌 Primary Role:

The backend is the brain of the system. It handles:

✅ Receiving CV uploads and processing them.

✅ Extracting relevant information (name, email, skills) using CrewAI Agents.

✅ Storing extracted data in the database.

✅ Handling chatbot queries by retrieving relevant candidate data.

📌 Why Use AI Agents Instead of Traditional Logic?

Traditional backend logic uses fixed rules and keyword-based parsing to extract data from documents. AI agents, by contrast, can understand context, learn from data, and make decisions, which makes them more effective for recruitment tasks, where CV layouts and wording vary widely.

Traditional Approach (Manual Parsing):

- Regular expressions (regex) extract candidate names and emails.

- Hardcoded rules categorize skills (e.g., if “Python” appears in the text → add it to skills).

AI-Powered Approach (CrewAI Agents):

- Uses Large Language Models (LLMs) to analyze CVs intelligently.

- Understands complex job descriptions and infers skills even when they are not explicitly listed.

- Can adapt and improve over time based on past extractions.
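To make the contrast concrete, the traditional approach can be sketched in a few lines of Python. This is a minimal illustration, not part of the application: the regex, the hypothetical `KNOWN_SKILLS` list, and the hypothetical `parse_cv_with_rules` function are assumptions, and the "first line is the name" rule shows exactly the kind of brittle heuristic the AI approach aims to replace.

```python
import re

# Hypothetical hardcoded skill list: any skill not listed here is missed
KNOWN_SKILLS = ["Python", "Machine Learning", "Data Science", "JavaScript", "React"]

# Simple, deliberately non-exhaustive email pattern
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def parse_cv_with_rules(text: str) -> dict:
    """Rule-based extraction: regex for the email, keyword matching for skills."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    # Brittle heuristic: assume the first non-empty line is the candidate's name
    name = lines[0] if lines else ""
    match = EMAIL_RE.search(text)
    email = match.group(0) if match else ""
    # Keyword rule: if a known skill appears anywhere in the text, add it
    skills = [s for s in KNOWN_SKILLS if s.lower() in text.lower()]
    return {"name": name, "email": email, "skills": skills}


cv_text = """John Doe
Contact: johndoe@gmail.com
Experienced in Python and machine learning; built data pipelines with pandas."""
print(parse_cv_with_rules(cv_text))
# {'name': 'John Doe', 'email': 'johndoe@gmail.com', 'skills': ['Python', 'Machine Learning']}
```

Note how pandas is missed because it is not in the hardcoded list, and if “Java” were added to the list, naive substring matching would also match it inside “JavaScript”.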

📌 Example CrewAI Workflow:

1️⃣ A CV Processor Agent reads a PDF CV and extracts:

- Name: John Doe

- Email: johndoe@gmail.com

- Skills: Python, Machine Learning, Data Science

2️⃣ The extracted data is stored in PostgreSQL.

3️⃣ A Chatbot Agent answers recruiter queries based on this structured data.

3️⃣ Persistence Layer (Database)

📌 Tools Used: PostgreSQL, psycopg2 (Python Library for Database Interaction)

📌 Primary Role:

The persistence layer is the data storage system that keeps a structured record of extracted candidate information. This layer ensures that data is:

✅ Securely stored in a structured format.

✅ Easily retrievable for chatbot queries.

✅ Optimized for scalability, allowing thousands of CVs to be stored efficiently.

📌 Why PostgreSQL?

- SQL-based: Allows complex queries like finding candidates with specific skills.

- Scalable: Can handle thousands of records without performance issues.

- Reliable: ACID-compliant transactions ensure data integrity.

📌 Example Data Stored in the Database

| ID | Name | Email | Skills |
|----|------|-------|--------|
| 1 | John Doe | johndoe@gmail.com | Python, Machine Learning, AI |
| 2 | Jane Smith | janesmith@email.com | JavaScript, React, Node.js |

📌 Example Query (Find all Python developers):

```sql
SELECT * FROM candidates WHERE skills LIKE '%Python%';
```
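In application code, the skill should be passed as a query parameter rather than pasted into the SQL string, which avoids SQL injection. The sketch below is an illustration under assumptions: `find_candidates_by_skill` is a hypothetical helper; with psycopg2 the placeholder is `%s`, while the self-check uses Python's built-in `sqlite3` module (placeholder `?`) as an in-memory stand-in for PostgreSQL.

```python
import sqlite3


def find_candidates_by_skill(conn, skill: str, placeholder: str = "%s") -> list:
    """Return (name, email, skills) rows whose skills text contains `skill`.

    Use placeholder="%s" with psycopg2 (PostgreSQL) and "?" with sqlite3.
    """
    query = f"SELECT name, email, skills FROM candidates WHERE skills LIKE {placeholder}"
    cur = conn.cursor()
    # The % wildcards live in the parameter value, never in the SQL text
    cur.execute(query, (f"%{skill}%",))
    rows = cur.fetchall()
    cur.close()
    return rows


# In-memory SQLite stand-in loaded with the two example rows
demo = sqlite3.connect(":memory:")
demo.execute("CREATE TABLE candidates (id INTEGER PRIMARY KEY, name TEXT, email TEXT, skills TEXT)")
demo.executemany(
    "INSERT INTO candidates (name, email, skills) VALUES (?, ?, ?)",
    [("John Doe", "johndoe@gmail.com", "Python, Machine Learning, AI"),
     ("Jane Smith", "janesmith@email.com", "JavaScript, React, Node.js")],
)
print(find_candidates_by_skill(demo, "Python", placeholder="?"))
# [('John Doe', 'johndoe@gmail.com', 'Python, Machine Learning, AI')]
```

Keep in mind that PostgreSQL's `LIKE` is case-sensitive (`ILIKE` is its case-insensitive variant), whereas SQLite's `LIKE` ignores ASCII case by default, and that substring matching means a search for “Java” would also return JavaScript developers.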

📌 How the Chatbot Uses This Data

A recruiter asks:

🗣️ “Who are the Python developers?”

🔹 The chatbot runs the SQL query, retrieves relevant candidates, and displays:

📝 “John Doe has Python skills.”
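Turning the query results into the chatbot's sentence is a small formatting step. A minimal sketch follows; `format_skill_answer` is a hypothetical helper. In the architecture described here, the Chatbot Agent (an LLM) would phrase the answer itself, so this only illustrates the deterministic part of going from rows to a reply.

```python
def format_skill_answer(names: list, skill: str) -> str:
    """Build a one-sentence answer from the candidate names returned by the SQL query."""
    if not names:
        return f"No candidates with {skill} skills were found."
    if len(names) == 1:
        return f"{names[0]} has {skill} skills."
    # Join all but the last name with commas, then put "and" before the last one
    joined = ", ".join(names[:-1]) + " and " + names[-1]
    return f"{joined} have {skill} skills."


print(format_skill_answer(["John Doe"], "Python"))  # John Doe has Python skills.
```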

🎯 Summary: Why This Architecture Works Best

✅ Separation of Concerns → Each layer is independent and scalable.

✅ Flexibility → The AI-powered backend can evolve without affecting the frontend or database.

✅ Efficiency → The AI agents reduce manual data entry and retrieval work for recruiters.

🔥 Up Next: Setting Up the Development Environment & Writing the Backend Code! 🚀

🔹 Step 2: Setting Up Your Development Environment

📌 2.1 Install Required Software

🔹 Install Python and Pip

- Check whether Python is installed by running `python --version` in a terminal. If it is not installed, download it from the official Python website (python.org).

- Ensure pip is installed: `python -m ensurepip --default-pip`

🔹 Install PostgreSQL

- Download PostgreSQL from the official PostgreSQL website (postgresql.org).

- Install PostgreSQL and set a password for the ‘postgres’ user.

- Open pgAdmin 4 and create a new database called candidates.
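The steps above create the database but not the table the backend writes to. One possible schema, matching the example data in Step 1 (`id`, `name`, `email`, `skills`), is sketched below; the column types and the hypothetical helper `ensure_candidates_table` are assumptions. It only relies on the standard DB-API `cursor()`, `execute()`, and `commit()` methods, so it can be run once with a `psycopg2.connect(...)` connection; the self-check at the bottom uses an in-memory `sqlite3` connection instead.

```python
import sqlite3

CREATE_CANDIDATES_TABLE = """
CREATE TABLE IF NOT EXISTS candidates (
    id SERIAL PRIMARY KEY,   -- auto-incrementing ID in PostgreSQL
    name TEXT NOT NULL,
    email TEXT,
    skills TEXT              -- comma-separated list, e.g. 'Python, React'
)
"""


def ensure_candidates_table(conn) -> None:
    """Create the candidates table if it does not exist (any DB-API connection)."""
    cur = conn.cursor()
    cur.execute(CREATE_CANDIDATES_TABLE)
    cur.close()
    conn.commit()


# Usage with PostgreSQL (assumes the credentials from DB_CONFIG):
#   import psycopg2
#   ensure_candidates_table(psycopg2.connect(dbname="candidates", user="postgres",
#                                            password="yourpassword", host="localhost"))

# Quick self-check with an in-memory SQLite stand-in
demo = sqlite3.connect(":memory:")
ensure_candidates_table(demo)
```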

📌 2.2 Install Required Python Libraries

In Command Prompt (cmd), run:

```bash
pip install flask crewai psycopg2-binary pypdf2 gunicorn flask-cors
```

🔹 Library Breakdown:

✅ flask → Web framework for the API

✅ crewai → AI agent framework

✅ psycopg2-binary → PostgreSQL adapter for Python

✅ pypdf2 → Extracts text from PDFs

✅ gunicorn → Production WSGI server

✅ flask-cors → Enables Cross-Origin Resource Sharing (CORS) so the browser frontend can call the API
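Some import names differ from the pip package names: `psycopg2-binary` is imported as `psycopg2`, `flask-cors` as `flask_cors`, and `pypdf2` as `PyPDF2`. A small sketch, assuming the mapping below (the helper `missing_packages` is hypothetical), checks which of the libraries above are importable after installation:

```python
import importlib.util

# pip package name -> Python import name for the libraries installed above
REQUIRED_MODULES = {
    "flask": "flask",
    "crewai": "crewai",
    "psycopg2-binary": "psycopg2",
    "pypdf2": "PyPDF2",
    "gunicorn": "gunicorn",
    "flask-cors": "flask_cors",
}


def missing_packages(modules: dict) -> list:
    """Return the pip names of packages whose import name cannot be found."""
    return [pip_name for pip_name, import_name in modules.items()
            if importlib.util.find_spec(import_name) is None]


missing = missing_packages(REQUIRED_MODULES)
print("Missing:", missing if missing else "none")
```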

🔹 Step 3: Building the Backend (Flask + CrewAI)

📌 3.1 Create a Flask API

Create a new Python file app.py and add:

```python
import os

from crewai import Agent, Task, Crew, Process
from flask import Flask, request, jsonify
from flask_cors import CORS
import psycopg2
from werkzeug.utils import secure_filename

# Set the OpenAI API key (safer: export OPENAI_API_KEY in your shell
# instead of hardcoding it in the source code)
os.environ.setdefault("OPENAI_API_KEY", "sk-xxxxxxxxxxxxxxxx")

# Initialize the Flask app and allow cross-origin requests from the frontend
app = Flask(__name__)
CORS(app)

UPLOAD_FOLDER = 'uploads'
app.config['UPLOAD_FOLDER'] = UPLOAD_FOLDER

# PostgreSQL connection settings
DB_CONFIG = {
    'dbname': 'candidates',
    'user': 'postgres',
    'password': 'yourpassword',
    'host': 'localhost',
    'port': '5432'
}

conn = psycopg2.connect(**DB_CONFIG)
cursor = conn.cursor()

# Ensure the upload folder exists
os.makedirs(UPLOAD_FOLDER, exist_ok=True)
```
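Hardcoding the database password in `app.py` is convenient for a POC but risky if the file is shared. One alternative, sketched below under the assumption that you export the standard libpq variable names (`PGDATABASE`, `PGUSER`, `PGPASSWORD`, `PGHOST`, `PGPORT`), is to build `DB_CONFIG` from the environment and fall back to the defaults used above; `load_db_config` is a hypothetical helper.

```python
import os


def load_db_config(env=os.environ) -> dict:
    """Build psycopg2 connection settings from environment variables, with defaults."""
    return {
        "dbname": env.get("PGDATABASE", "candidates"),
        "user": env.get("PGUSER", "postgres"),
        "password": env.get("PGPASSWORD", ""),
        "host": env.get("PGHOST", "localhost"),
        "port": env.get("PGPORT", "5432"),
    }


# Usage in app.py: conn = psycopg2.connect(**load_db_config())
print(load_db_config({"PGPASSWORD": "secret"})["host"])  # localhost
```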

📌 3.2 Handle PDF Uploads and Extract Data

🔹 How It Works

- Users upload CVs via the web interface.

- Flask receives the file, saves it, and sends it to CrewAI for processing.

- CrewAI extracts the candidate data with a custom PDF tool, and the result is saved to PostgreSQL.

```python
@app.route('/upload', methods=['POST'])
def upload_cv():
    """Handles CV upload and extraction."""
    if 'files' not in request.files:
        return jsonify({'message': 'No file uploaded'}), 400

    files = request.files.getlist('files')
    for file in files:
        filename = secure_filename(file.filename)
        filepath = os.path.join(app.config['UPLOAD_FOLDER'], filename)
        file.save(filepath)
        process_cv(filepath)  # Process the uploaded CV

    return jsonify({'message': 'CVs uploaded and processed successfully'})
```
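The route above saves every uploaded file whatever its type, and Werkzeug's `secure_filename` can return an empty string for names made only of unsafe characters. A minimal guard, assuming only `.pdf` files should be accepted (the names `ALLOWED_EXTENSIONS` and `allowed_file` are hypothetical, in the style of Flask's file-upload pattern), could be called on the sanitized name in the loop, skipping the file when it returns `False`:

```python
import os

ALLOWED_EXTENSIONS = {".pdf"}


def allowed_file(filename: str) -> bool:
    """Return True if the (already sanitized) filename is non-empty and ends in .pdf."""
    if not filename:
        return False
    _, ext = os.path.splitext(filename)
    return ext.lower() in ALLOWED_EXTENSIONS


print(allowed_file("john_doe_cv.PDF"))  # True
```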

📌 3.3 Extract CV Data Using CrewAI

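```python
import json  # add this import at the top of app.py


def process_cv(filepath):
    """Extracts information from a CV using CrewAI and stores it in PostgreSQL."""
    # pdfScrapingTool is the custom PDF tool mentioned above, initialized for an
    # exclusive search within the given document; its definition is not shown here
    agent = Agent(
        role='CV Processor',
        goal='Extract candidate details from CVs',
        verbose=True,
        memory=True,
        backstory="Expert in parsing and analyzing resumes.",
        llm='gpt-4o-mini',
        tools=[pdfScrapingTool]
    )

    task = Task(
        description=f"Extract the name, email and skills of the candidate from the PDF CV located at '{filepath}' and return structured JSON.",
        expected_output="""A structured JSON object without comments, following the structure below:
{{
    "name": string,   // the full name of the candidate
    "email": string,  // the email of the candidate
    "skills": string  // the list of skills of the candidate
}}
""",
        tools=[pdfScrapingTool],
        agent=agent
    )

    # Minimal completion (assumption): run the crew, then store the JSON result
    crew = Crew(agents=[agent], tasks=[task], process=Process.sequential)
    data = json.loads(str(crew.kickoff()))
    skills = data.get('skills', '')
    if isinstance(skills, list):  # the LLM may return skills as a list
        skills = ', '.join(skills)
    cursor.execute(
        "INSERT INTO candidates (name, email, skills) VALUES (%s, %s, %s)",
        (data.get('name'), data.get('email'), skills)
    )
    conn.commit()
```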
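Even with a precise `expected_output`, an LLM may wrap its answer in a Markdown code fence or return `skills` as a list instead of a string. Before storing the result, a defensive parser can normalize the agent's raw text output. The sketch below is an assumption, not part of CrewAI: `parse_candidate_json` is a hypothetical helper that strips an optional fence, checks the three expected fields, and joins list-valued skills into a comma-separated string.

```python
import json
import re

# Matches an optional Markdown code-fence wrapper (with or without "json") around the payload
FENCE_RE = re.compile(r"^`{3}(?:json)?\s*(.*?)\s*`{3}$", re.DOTALL)


def parse_candidate_json(raw: str) -> dict:
    """Parse the agent's answer into {'name', 'email', 'skills'} with string values."""
    text = raw.strip()
    match = FENCE_RE.match(text)
    if match:
        text = match.group(1)
    data = json.loads(text)  # raises json.JSONDecodeError (a ValueError) if not JSON
    if not isinstance(data, dict):
        raise ValueError("Agent output is not a JSON object")

    missing = {"name", "email", "skills"} - set(data)
    if missing:
        raise ValueError(f"Missing fields in agent output: {sorted(missing)}")

    skills = data["skills"]
    # Store skills as a comma-separated string, matching a TEXT column
    if isinstance(skills, list):
        skills = ", ".join(str(s).strip() for s in skills)
    return {"name": str(data["name"]).strip(),
            "email": str(data["email"]).strip(),
            "skills": str(skills).strip()}


print(parse_candidate_json('{"name": "John Doe", "email": "johndoe@gmail.com", "skills": ["Python", "AI"]}'))
# {'name': 'John Doe', 'email': 'johndoe@gmail.com', 'skills': 'Python, AI'}
```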

🔹 Step 4: Build the Frontend (HTML + JavaScript)

Create a file index.html:

```html
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <title>CV Uploader & Chatbot</title>
    <script src="https://cdn.jsdelivr.net/npm/axios/dist/axios.min.js"></script>
</head>
<body>
    <h1>Candidate Database</h1>

    <h2>Upload CVs</h2>
    <form id="upload-form">
        <input type="file" id="file" name="file" accept=".pdf" multiple>
        <button type="submit">Upload</button>
    </form>

    <h2>Chat with AI</h2>
    <textarea id="chat-input" placeholder="Ask about candidates…"></textarea>
    <button id="send-message">Send</button>
    <div id="chat-response"></div>

    <script>
        // Send the selected PDFs to the Flask /upload endpoint
        document.getElementById('upload-form').addEventListener('submit', async (event) => {
            event.preventDefault();
            let files = document.getElementById('file').files;
            let formData = new FormData();
            for (let file of files) formData.append('files', file);
            let response = await axios.post('http://127.0.0.1:5000/upload', formData);
            alert(response.data.message);
        });

        // Send the recruiter's question to the /chat endpoint
        // (this endpoint is not part of the backend code shown above)
        document.getElementById('send-message').addEventListener('click', async () => {
            let message = document.getElementById('chat-input').value;
            let response = await axios.post('http://127.0.0.1:5000/chat', { message });
            document.getElementById('chat-response').innerText = response.data.response;
        });
    </script>
</body>
</html>
```

🎯 Congratulations! 🚀 You have successfully built an AI-powered CV processing and chatbot system!

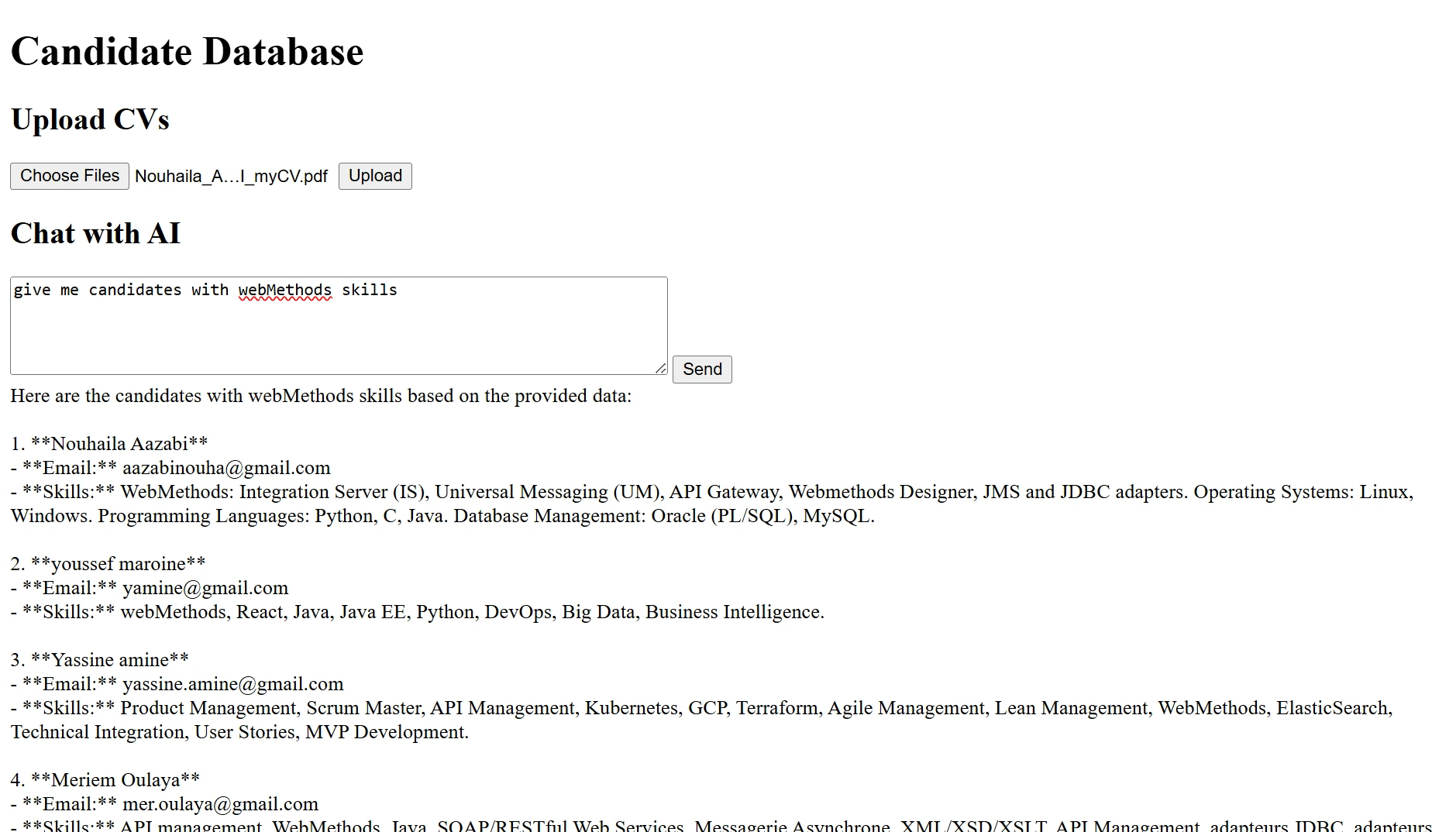

🖥️ Here is a screenshot of the application, successfully processing CVs and answering queries through the AI-powered chatbot! 🚀

📥 Download the complete source code of this AI-powered CV processing application in ZIP format and start building your own automated recruitment system! 🚀 Click here to download 🔽